Previous Use of “Hare and Pineapple” Passage and Items

The Stanford 10 Form B, which contains the passage and the six multiple-choice items, is used exclusively as a secure form. This means that the form is available only to state-wide or large-district customers who agree to maintain security of the documents at all times. Between 2004 and 2012, the form was used in six other states and three large districts. In 2012, the only state-wide use of this form was in New York State. Until the events of this past week, we had no knowledge of any controversy associated with the passage entitled “The Hare and the Pineapple” from any prior use.

State administrations include:

• Alabama 2004-2011

• Arkansas 2008-2010

• Delaware 2005-2010

• Illinois 2006-2007

• New Mexico 2005-2007

• Florida 2006

Large district administrations include:

• Chicago 2006-2007

• Fort Worth

• Houston

Item Performance

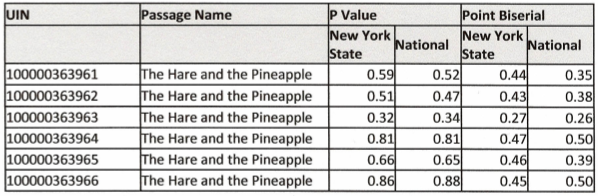

Item statistics are provided for the six items related to “The Hare and the Pineapple,” based both on the New York State field test in 2011 and on a representative national sample (2002). As can be observed from the statistics on the following page, the items performed reasonably well. Based on New York State students’ performance, item p values range from 0.32 to 0.86, indicating a good selection of easy and challenging items related to the passage. The discrimination power of the items (based on point-biserial values) is also high, ranging from 0.27 to 0.47. The industry standard requires point-biserial values to be higher than 0.20.
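For readers less familiar with these statistics, the following is a minimal sketch of how an item p value and a point-biserial discrimination value can be computed from scored responses. It is an illustration only, not the procedure used for Stanford 10: the function names and the response data are hypothetical, and the sketch assumes the point biserial is taken against the total score including the item itself (some analyses exclude the item from the total).

```python
from math import sqrt

# Threshold quoted in the text above: point-biserial values should exceed 0.20.
MIN_POINT_BISERIAL = 0.20


def p_value(item_scores):
    """Proportion of students answering the item correctly (scores are 0 or 1)."""
    return sum(item_scores) / len(item_scores)


def point_biserial(item_scores, total_scores):
    """Pearson correlation between a 0/1 item score and the total test score."""
    n = len(item_scores)
    mean_item = sum(item_scores) / n
    mean_total = sum(total_scores) / n
    cov = sum((x - mean_item) * (y - mean_total)
              for x, y in zip(item_scores, total_scores)) / n
    var_item = sum((x - mean_item) ** 2 for x in item_scores) / n
    var_total = sum((y - mean_total) ** 2 for y in total_scores) / n
    if var_item == 0 or var_total == 0:
        # Undefined when every student has the same item or total score.
        return float("nan")
    return cov / sqrt(var_item * var_total)


def item_statistics(responses):
    """responses: one row per student, one 0/1 column per item."""
    totals = [sum(row) for row in responses]  # assumption: item included in total
    stats = []
    for j in range(len(responses[0])):
        scores = [row[j] for row in responses]
        r = point_biserial(scores, totals)
        stats.append({"item": j + 1, "p": p_value(scores), "r_pb": r,
                      "meets_threshold": r > MIN_POINT_BISERIAL})
    return stats


# Hypothetical scored responses (not actual student data).
responses = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]
for row in item_statistics(responses):
    print(row)
```

A higher p value means an easier item, and a higher point-biserial value means that students who did well on the test overall were more likely to answer the item correctly.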

Background to SAT10 Development

The National Research Program for the standardization of Stanford 10 took place during the spring and fall of 2002. The purpose of the National Research Program was to provide the data used to equate the levels and forms of the test series, establish the statistical reliability and validity of the tests, and develop normative information descriptive of achievement in schools nationwide. Testing for the Spring Standardization Program of all levels and forms of Stanford 10 took place from April 1, 2002, to April 26, 2002. Testing for the Equating of Levels Program, Equating of Forms Program, and Equating of Stanford 10 to Stanford 9 took place from April 1, 2002, to May 24, 2002. Approximately 250,000 students from 650 school districts across the nation participated in the Spring Standardization Program, with another 85,000 students from 385 school districts participating in the spring equating programs. Some students participated in more than one program.

Testing for the Fall Standardization Program took place from September 9, 2002, to October 18, 2002. Testing for the Equating of Levels Program, Equating of Forms Program, and Equating of Stanford 10 to Stanford 9 took place from September 9, 2002, to November 1, 2002. Approximately 110,000 students participated in the Fall Standardization and Equating Programs. Some students participated in more than one program.

The majority of individuals who wrote test items for Stanford 10 were practicing teachers from across the country with extensive experience in various content areas. Test item writers were thoroughly trained on the principles of test item development and review procedures. They received detailed specifications for the content area for which they were writing, as well as lists of instructional standards and examples of both properly and improperly constructed test items.

As test items were written and received, each test item was submitted to rigorous internal screening processes that included examinations by:

• content experts, who reviewed each test item for alignment to specified instructional standards, cognitive levels, and processes;

• measurement experts, who reviewed each test item for adequate measurement properties; and

• editorial specialists, who screened each test item for grammatical and typographical errors.

The items were then administered in a National Item Tryout Program which provided information about the pool of items from which the final forms of the test were constructed. The information provided by the Stanford 10 National Item Tryout Program included:

• The appropriateness of the item format: How well does the item measure the particular instructional standard for which it was written?

• The difficulty of the question: How many students in the tryout group responded correctly to the item?

• The sensitivity of the item: How well does the item discriminate between students who score high on the test and those who score low?

• The grade-to-grade progression in difficulty: For items tried out in different grades, did more students answer the question correctly at successively higher grades?

• The functioning of the item options: How many students selected each option?

• The suitability of test length: Are the number of items per subtest and recommended administration times satisfactory?
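Two of the questions above, the functioning of the item options and the grade-to-grade progression in difficulty, lend themselves to a short illustration. The sketch below is hypothetical and is not the tryout program's actual analysis: the function names, the option labels A through D, and the sample values are assumptions made for the example.

```python
from collections import Counter


def option_proportions(choices, options=("A", "B", "C", "D")):
    """Share of students selecting each answer option for one item."""
    if not choices:
        raise ValueError("at least one student response is required")
    counts = Counter(choices)
    return {opt: counts.get(opt, 0) / len(choices) for opt in options}


def progresses_by_grade(p_by_grade):
    """True if the proportion correct rises at each successively higher grade."""
    values = [p_by_grade[grade] for grade in sorted(p_by_grade)]
    return all(lower < higher for lower, higher in zip(values, values[1:]))


# Hypothetical option choices for one item (not actual tryout data).
choices = ["A", "B", "A", "C", "A", "D", "A", "B"]
print(option_proportions(choices))

# Hypothetical proportion correct for the same item in grades 3, 4, and 5.
print(progresses_by_grade({3: 0.40, 4: 0.55, 5: 0.70}))
```

An option that almost no student selects, or an item that does not become easier at higher grades, would be a candidate for closer review.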

In addition to statistical information about individual items, information was collected from teachers and students concerning the appropriateness of the questions, the clarity of the directions, quality of the artwork, and other relevant information.

We trust this information is helpful to you. Please know that Pearson is ready to assist you and answer any additional questions you may have. As such, don’t hesitate to contact me at

Most Sincerely,

Jon S. Twing, Ph.D.

Executive Vice President & Chief Measurement Officer

Pearson